Research Methodologies in Dovetail

Modular Research Approach

At Hammerhead, we have developed interrelated products and experiences. Given the wide array of user journeys across these products and the variety of internal team needs, we've adopted a versatile research methodology that prioritizes adaptability and focuses on insights.

Insight Generation

We define insights as user observations supported by concrete evidence. A typical example at Hammerhead is a Dovetail Insight, which combines a succinct summary with excerpts from interview transcripts.

Pattern-Based Research (Inductive)

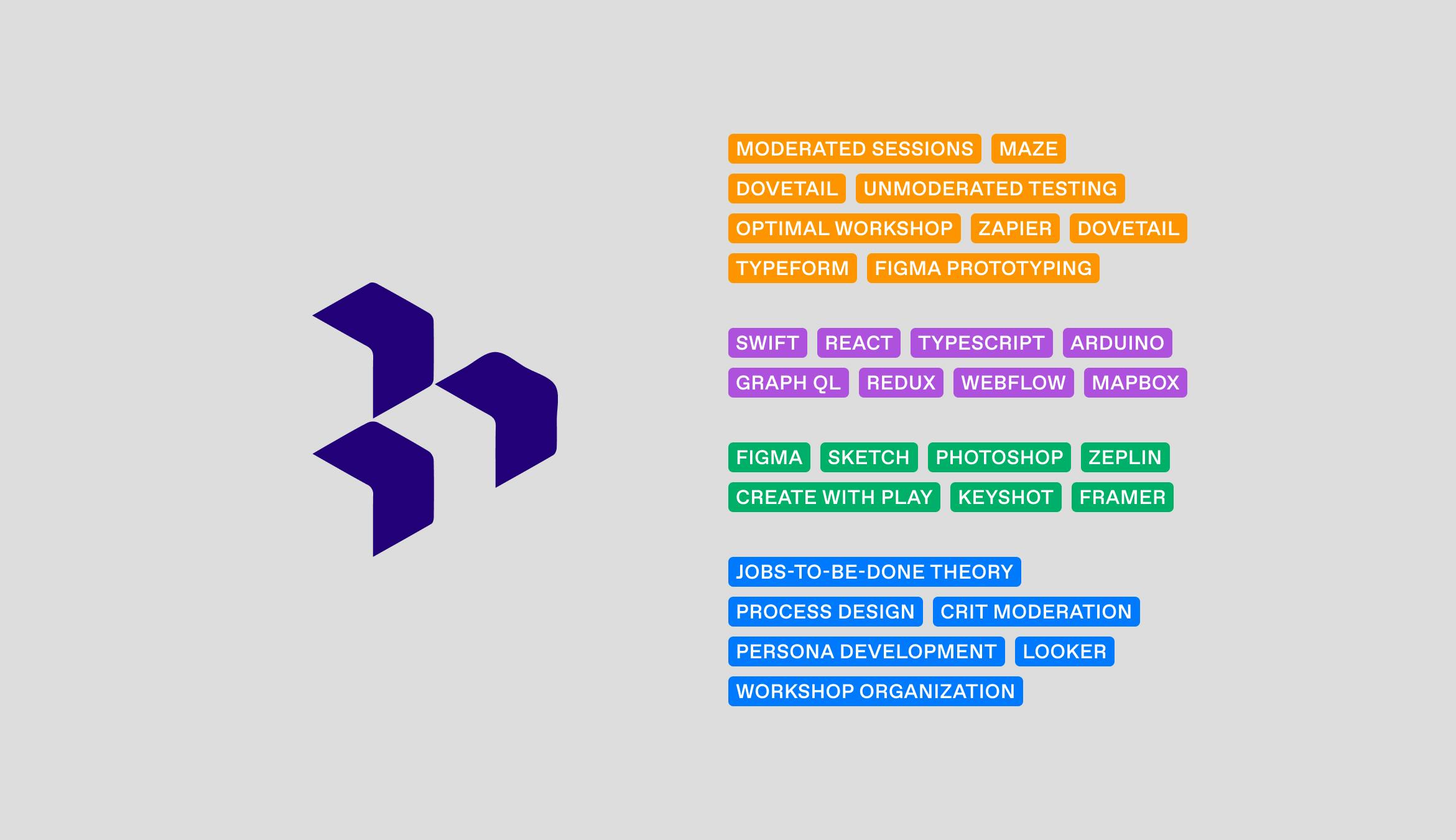

Our research often emerges from trends observed across various research activities, including customer interviews, churn analysis, and feature-specific studies. I've also built a Zapier bot for our Slack team that accepts any unstructured form of user feedback and injects it into Dovetail. As we regularly sift through this dataset, we highlight and annotate trends. The resulting insights are predominantly qualitative and reveal unforeseen user behaviors. Tags are sometimes also used to classify the context in which a transcript takes place. Features based on these insights are usually validated further through subsequent rounds of Hypothesis-Based Research.

Hypothesis-Based Research (Deductive)

This type of research is hypothesis-driven, originating from industry knowledge, observed user behaviors, or competitive analysis. At Hammerhead, this often involves analyzing design iterations through moderated user interviews or unmoderated, asynchronous testing with Maze. The insights generated from this style of research lean towards quantitative analysis and occasionally suggest the causes behind behaviors. Hypothesis-based findings are typically cross-verified with subsequent Pattern-Based Research.

Taxonomies

Project-Specific Tags

These tags are exclusive to a particular research project and denote context-specific user motivations, design choices, or direct user feedback. They are used only within the project's scope and are organized however the researcher prefers. Such tags evolve with the research, starting abstract and gradually becoming more refined. Limiting these tags to their respective projects allows for focused research without adding noise to other studies.

Universal Tags

Universal tags are applied across all research projects. Dovetail uses the term "Extensions" for them. They identify universally relevant user emotions and support ongoing tracking of products, experiences, or third-party interactions. These tags are organized on universal boards, accessible to any project, and are color-coded for ease of reference. Universal tags facilitate long-term and high-level tracking. Occasionally, a project-specific tag may gain enough relevance to be included on a universal board, but more commonly, universal tags are drawn from existing boards.
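
The two tag scopes can be pictured with a small in-memory model. This is only a sketch for illustration: the names `Tag`, `TagBoard`, and `promote`, as well as the example tag "confused" and the board color, are hypothetical and do not correspond to Dovetail's product or API.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(frozen=True)
class Tag:
    name: str
    project: Optional[str] = None  # None means the tag is universal

    @property
    def is_universal(self) -> bool:
        return self.project is None


@dataclass
class TagBoard:
    title: str
    color: str  # universal boards are color-coded for ease of reference
    tags: List[Tag] = field(default_factory=list)


def promote(tag: Tag, board: TagBoard) -> Tag:
    """Create a universal copy of a project tag and place it on a universal board."""
    universal = Tag(name=tag.name, project=None)
    if universal not in board.tags:  # avoid duplicating a tag already on the board
        board.tags.append(universal)
    return universal


# Hypothetical example: a project tag that proved relevant beyond its project.
sentiment_board = TagBoard(title="Sentiment", color="green")
project_tag = Tag(name="confused", project="Off-Route CLIMBER")
universal_tag = promote(project_tag, sentiment_board)
```

In this model the project tag itself is left untouched, so the original study keeps its own taxonomy while the universal board gains a tag that any project can use.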

Tagging Example

Consider a transcript discussing the Off-Route CLIMBER feature. Initial tagging might focus on project-specific elements like user hesitations or the perceived value of the feature. Follow-up tagging would then apply universal patterns such as user sentiment and the software feature discussed.

Dual Tagging: Applying multiple tags to a statement enriches the dataset.

Conservative Tagging: Merging tags onto universal boards, or maintaining project tags that seem to overlap, is easier than splitting apart a tag that was applied too broadly. Hence, a cautious approach to tagging is recommended.
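
The following sketch illustrates both notes under stated assumptions. Each highlight carries project-specific and universal tags at once (dual tagging), and two overlapping tags are merged with a purely mechanical operation. The `Highlight` class, the `merge_tags` helper, the tag names, and the quotes are all made up for illustration; none of them come from Dovetail or from real interviews.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Highlight:
    text: str
    tags: Set[str] = field(default_factory=set)


def merge_tags(highlights: List[Highlight], old: str, new: str) -> int:
    """Replace tag `old` with `new` on every highlight; return how many changed."""
    changed = 0
    for h in highlights:
        if old in h.tags:
            h.tags.discard(old)
            h.tags.add(new)
            changed += 1
    return changed


# Hypothetical highlights, each with project-specific and universal tags.
highlights = [
    Highlight(
        "I wasn't sure the climb screen would appear without a route.",
        {"hesitation", "sentiment: negative", "feature: Off-Route CLIMBER"},
    ),
    Highlight(
        "I didn't know if it would work on my usual loop.",
        {"uncertainty", "feature: Off-Route CLIMBER"},
    ),
]

# Two project tags turned out to mean the same thing, so they are merged.
changed = merge_tags(highlights, old="uncertainty", new="hesitation")
```

The reverse operation has no comparable helper: splitting one broad tag into two narrower ones means rereading every tagged highlight and deciding case by case, which is one way to read the recommendation to tag conservatively.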

Search & Filter Example

The true utility of universal tags becomes evident in the search capabilities they enable. For instance, to gauge user sentiment about a specific rear bike radar, one might filter for mentions of the radar, then further refine the search with sentiment tags. This process can lead to new insights or validate existing hypotheses.
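
The radar example can be sketched as a two-step filter over tagged highlights. This is a toy model, not Dovetail's search: the tag names ("product: rear radar", "sentiment: ..."), the sample highlights, and the helpers `with_tags` and `sentiment_counts` are assumptions made for illustration.

```python
from collections import Counter

# Hypothetical tagged highlights; the text and tags are invented.
highlights = [
    {"text": "The radar alerts are easy to notice.",
     "tags": {"product: rear radar", "sentiment: positive"}},
    {"text": "Pairing the radar took several tries.",
     "tags": {"product: rear radar", "sentiment: negative"}},
    {"text": "The climb profile is great on long rides.",
     "tags": {"feature: Off-Route CLIMBER", "sentiment: positive"}},
]


def with_tags(items, *required):
    """Keep only the highlights that carry every tag in `required`."""
    needed = set(required)
    return [h for h in items if needed <= h["tags"]]


def sentiment_counts(items, prefix="sentiment: "):
    """Tally sentiment tags across the given highlights."""
    counts = Counter()
    for h in items:
        for tag in h["tags"]:
            if tag.startswith(prefix):
                counts[tag[len(prefix):]] += 1
    return counts


# Step 1: filter for mentions of the radar.
radar_mentions = with_tags(highlights, "product: rear radar")
# Step 2: refine with a sentiment tag, or tally sentiment across the subset.
radar_negative = with_tags(radar_mentions, "sentiment: negative")
radar_sentiment = sentiment_counts(radar_mentions)
```

Because sentiment and product are both universal tags, the same two steps work unchanged across highlights from any project.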

Collaborators

Steve Winchell and I developed these methodologies and tagging structures together through trial and error during the development of Karoo 2.

References

Foundations of Atomic Research by Tomer Sharon

Dovetail

Workspace Tags at Dovetail